The following is a paper notes article on “Tango: Distributed Data Structures over a Shared Log” by Balakrishnan et al. from SOSP 2013. The article focuses on the main body of the paper; I will cover Tango’s streaming and transaction support in a separate article sometime in the future.

I am covering this paper before its companion paper, “CORFU: A Shared Log Design for Flash Clusters”, also by Balakrishnan et al., from NSDI 2012. I expect the paper notes article for CORFU will answer many of the open questions in this article.

Summary

Tango is a system for replicating a data structure to provide linearizable semantics and fault tolerance. Tango is framed as a system for application metadata, whose requirements include fault tolerance, high availability and fairly strong consistency and ordering guarantees.

[Quick note on terminology: unlike the usual terminology used to describe systems like Raft and VRR, Tango uses the term “client” for what similar systems call a server, and “external client” for what similar systems call a client. We shall use the same terminology as Tango.]

The basics

At its heart, Tango takes SMR and separates the replication of the log from the hosts running the application. In traditional SMR, each host runs the application itself (an in-memory deterministic state machine) and stores a local copy of the log. With the help of a consensus protocol, the local copy of the log is kept up to date and clients are provided with linearizable semantics. In Tango, only the application runs on these hosts. The log is instead distributed (using chain replication) across a set of storage nodes (e.g. an SSD cluster). Tango clients do not communicate directly via message passing (as is the case in SMR); instead they interact via the shared log.

SMR is often used with leader-driven consensus. This means that only the leader may commit an operation to the replicated log and, if the leader fails, the system is unavailable to clients until a new leader is chosen. In contrast, Tango’s approach allows clients to replicate commands to the log directly. The leader is replaced by a dedicated sequencer whose state is soft, so the system can continue to operate (at higher latency) if the sequencer fails.

Tango uses a stream abstraction to shard operations by the data structure on which they operate. This allows clients to efficiently “ignore” operations which do not apply to their data structures.

Shared log (aka Corfu)

The shared log (aka Corfu) is composed of clusters of storage nodes. Within each cluster, each storage node is a replica and operates on a read-many/write-once basis. Global address x maps to cluster (x mod n) and local address (x div n), where n is the number of clusters. Clients read from and write to clusters using chain replication, which is also how failures are handled.
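As a small illustration of the address mapping described above, here is a sketch in Python. The function names `to_local` and `to_global` are my own and do not come from the paper; the arithmetic is just the (x mod n, x div n) rule and its inverse.

```python
def to_local(global_addr: int, n_clusters: int) -> tuple[int, int]:
    """Map a global log address to (cluster index, local address)."""
    return global_addr % n_clusters, global_addr // n_clusters


def to_global(cluster: int, local_addr: int, n_clusters: int) -> int:
    """Inverse mapping: recover the global address from a cluster's local address."""
    return local_addr * n_clusters + cluster


if __name__ == "__main__":
    # With 3 clusters, consecutive global addresses rotate round-robin over clusters.
    for x in range(6):
        print(x, to_local(x, 3))
```

The round-robin layout means consecutive appends land on different clusters, which is one reason the log can scale beyond a single replica set.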

The sequencer stores the global address of the log tail. If the sequencer fails, this state can be reconstructed by querying the storage nodes for the local addresses of their log tails. The paper also mentions that each cluster has its own dedicated sequencer; I could not understand when this is used.
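To make the reconstruction step concrete, here is a rough sketch of how a global tail could be derived from per-cluster local tails, given the round-robin mapping (x mod n, x div n). This is my own reading, not code from the paper: `recover_tail` is a hypothetical name, and I assume each cluster reports its local tail as the next unused local address.

```python
def recover_tail(local_tails: list[int]) -> int:
    """Return the next free global address, given each cluster's local tail.

    Assumption: local_tails[k] is the next unused local address on cluster k,
    so the highest local address written on that cluster is local_tails[k] - 1.
    """
    n = len(local_tails)
    highest_written = -1
    for cluster, tail in enumerate(local_tails):
        if tail > 0:
            # Global address of the last entry written on this cluster.
            highest_written = max(highest_written, (tail - 1) * n + cluster)
    return highest_written + 1


if __name__ == "__main__":
    # Cluster 0 holds global addresses 0 and 3; clusters 1 and 2 hold 1 and 2.
    print(recover_tail([2, 1, 1]))
```

Because the result is derived purely from what the storage nodes hold, the sequencer itself never needs durable state, which matches the paper's description of it as soft state.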

Conflicts between multiple clients writing to the same address are resolved safely, and Corfu exposes an operation for filling in unused addresses.
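The following is a minimal in-memory sketch of how write-once addresses make concurrent writes safe and how a fill operation might behave. The class `WriteOnceLog`, the `AlreadyWritten` exception and the `JUNK` marker are all hypothetical names of mine; the real Corfu interface and its replication protocol are not modelled here.

```python
class AlreadyWritten(Exception):
    """Raised when a client tries to write an address that already holds a value."""


JUNK = object()  # hypothetical marker for an address that was filled rather than written


class WriteOnceLog:
    def __init__(self) -> None:
        self._cells: dict[int, object] = {}

    def write(self, addr: int, value: object) -> None:
        # Only the first writer to an address succeeds; later writers get an error
        # and would have to retry at a different address.
        if addr in self._cells:
            raise AlreadyWritten(addr)
        self._cells[addr] = value

    def fill(self, addr: int) -> bool:
        # Mark an unused address as junk so readers can skip past the hole.
        # Returns False (and changes nothing) if the address was already written.
        if addr in self._cells:
            return False
        self._cells[addr] = JUNK
        return True

    def read(self, addr: int) -> object:
        return self._cells[addr]  # KeyError if the address is still unwritten


if __name__ == "__main__":
    log = WriteOnceLog()
    log.write(0, "first")
    try:
        log.write(0, "second")
    except AlreadyWritten:
        print("address 0 already taken; original value kept:", log.read(0))
    print("filled hole at 1:", log.fill(1))
```

In this sketch a losing writer simply learns that the address is taken, and a slow or crashed client that reserved an address but never wrote to it cannot block readers once another client fills the hole.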

Application interface

In many classic protocols, the external client must locate a master/coordinator to handle the request on its behalf. In Tango (as in some other leaderless systems, such as EPaxos), any external client can communicate with any client. SMR often uses master leases or a similar mechanism to handle read requests without fully replicating them (unlike write requests) or having to pass them to the master. Corfu supports a check operation so Tango can check (and update if necessary) the local view before applying the update. Transactions are supported with speculation, object version numbers and a commit/abort stage before an upcall to the application is made.

The API for interfacing with Tango seems similar to that of SMR. An application must provide a callback to apply an operation and has access to methods for adding read or write operations. An application may provide a checkpointing mechanism and may call discard on any log prefix.
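To give a feel for this style of API, here is a toy sketch of a replicated register built over an in-memory stand-in for the shared log. All names (`SharedLog`, `Register`, `apply`, `_sync`) are my own illustrative choices rather than Tango's actual interface, and the sketch ignores streams, transactions, checkpointing and failures.

```python
class SharedLog:
    """In-memory stand-in for the shared log (hypothetical, single process)."""

    def __init__(self) -> None:
        self._entries: list[object] = []

    def append(self, entry: object) -> int:
        self._entries.append(entry)
        return len(self._entries) - 1

    def tail(self) -> int:
        # Plays the role of the check operation: where does the log currently end?
        return len(self._entries)

    def read(self, addr: int) -> object:
        return self._entries[addr]


class Register:
    """A single-value object whose state is derived entirely from the log."""

    def __init__(self, log: SharedLog) -> None:
        self._log = log
        self._next = 0      # next log position this view has not yet applied
        self._value = None  # local in-memory view

    def apply(self, entry: object) -> None:
        # Callback: apply one logged operation to the local view.
        self._value = entry

    def _sync(self) -> None:
        # Play the log forward to the current tail before answering a read.
        tail = self._log.tail()
        while self._next < tail:
            self.apply(self._log.read(self._next))
            self._next += 1

    def write(self, value: object) -> None:
        # Writes only append to the log; the local view changes when the log is replayed.
        self._log.append(value)

    def read(self) -> object:
        self._sync()
        return self._value


if __name__ == "__main__":
    log = SharedLog()
    a, b = Register(log), Register(log)
    a.write(5)
    print(b.read())  # b catches up via the log rather than via a message from a
```

The point of the sketch is that the two views never talk to each other: they stay consistent only because both replay the same log up to its tail before serving a read.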

Related systems

Alternatives to Tango are systems such as Zookeeper, Raft and Chubby. In the introduction, the authors highlight that such systems are not sufficient since they only support specific data structures and/or do not support operations over multiple data structures. Whilst I support this idea, I would argue that in at least some existing systems, operations over multiple data structures can be supported by treating the multiple data structures as one. This allows operations over multiple data structures but comes at the high cost of imposing a total ordering on operations when only a small partial ordering is required. Since Tango supports streaming, it already has access to a partial ordering on operations instead of just a total ordering. P-SMR by Parisa Marandi demonstrates the efficiency improvements possible by utilizing this information. Another argument given in the paper is that adopting SMR after the development of an application “often requires a drastic rewrite of the code”.

Evaluation

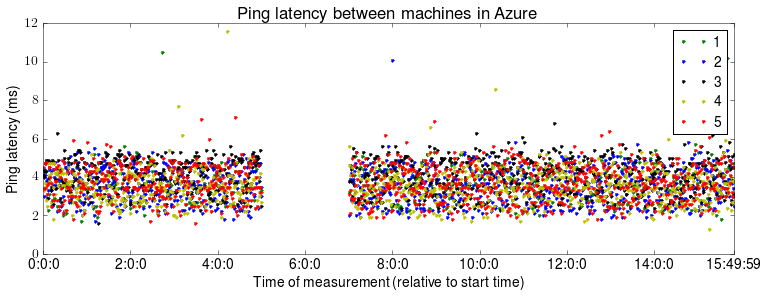

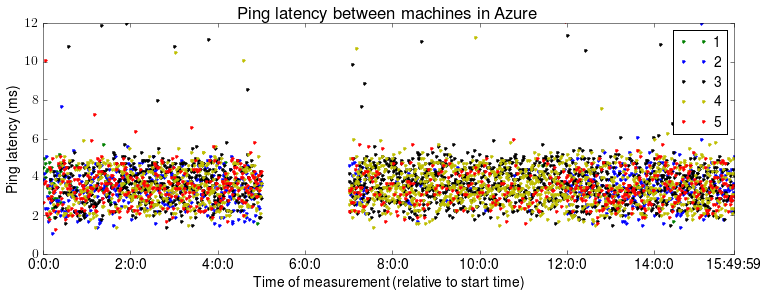

The experimental evaluation of Tango focuses on measuring throughput and/or latency whilst varying the number of clients and the ratio of write to read requests. I would be interested to see some results on the recovery time for various patterns of storage node or sequencer failures. The initial evaluation considers three scenarios: (a) 18 storage nodes and 1 client, (b) 18 storage nodes and 2 clients, (c) 2 or 18 storage nodes and 1 to 18 clients. Generally speaking, the results are very much as you might expect: increasing request load increases request latency, writes place a much higher burden on the system than reads, and read-only clients scale linearly until the storage nodes are saturated.

The paper does not mention any formal proof of correctness (only that the sequencer is not required for correctness). Log compaction is supported with the forget call and checkpointing. Dynamic membership support for clients is trivial due to the design of Tango, but I would be interested to see how Corfu and its variant of chain replication handle storage node failures.

It is not fair to directly compare Tango to systems using SMR with a majority quorum consensus algorithm (such as Raft). Tango’s scalability comes (in part) from the sharding of the replication log into clusters. This technique can be applied to SMR too (see S-SMR). It would be interesting to see how Tango performs with the storage node, application node and sequencer co-located on the same host. However (particularly with the current trend towards containers, Docker and unikernels), separating systems like Tango into function-specific instances/hosts has some significant benefits, such as decoupling the number of storage hosts from the number of application hosts, or fine-grained provisioning for the sequencer compared to the storage/application hosts.

Conclusion

Each new paper that presents a highly available, fault-tolerant system seems to build upon many of the old concepts (such as Paxos, SMR and timeouts for failure detection), in combination with a few novel ideas. In this case, Tango builds upon chain replication, for replicating log entries, and the typical API for SMR. This is the first time I have seen a soft-state sequencer used in such a system, as well as the divide between the storage nodes and application nodes. Overall, I really like this paper and it’s nice to see some novelty in a space crowded with variants of Paxos + SMR.