We have been hard at work over the last few months, designing and securing the PAWS network. This is the outline of talk that I gave a few weeks back. The full powerpoint is available on Slideshare

Intro

Hi Everyone, My name’s Heidi and today I’m going to walk you through some of ideas behind Project PAWS, the Public Access Wifi Service. It should take about 15 mins to run you through our aims, the obstacles and we are trying to address them.

Internet Access – A Human Right ?

In July 2012, the United Nations unanimously backed a notion stating that “All people should be allowed to connect to and express themselves freely on the Internet”. Our aim is to address this and extent internet access to all, particular regarding access to essential public services.

Lowest Cost Denominator Networking

Mostly internet access today is done using “best effect” access, given the infrastructure available and the current state of the network, provide the best access possible, which of course comes at a cost. This creates only 2 possible levels of access: those who have internet access and those who don’t.

We aim to extend this, introducing a new level of basic access called less-than-best effort access, its has lower user requirements, thus reducing costs, helping to make internet access more widely available. According to the Internet World Stats survey of October 2012, more than 10% of the UK population don’t have internet access in their homes. This is what we hope to address.

Trial Deployment: Aspley Nottingham

Before tackling the issue nationally, we want to tackle it in one small area of the UK. For this, we have chosen Ashley in Nottingham. We chose this because according to the Nottingham Citizens survey, around 1/3 of citizens don’t have internet access. This is 3 times the national average, so if we can tackle the problem here, then hopefully we can tackle the problem anywhere.

The aim is to provide internet access to 50 citizens of Aspley who don’t currently have access. Our approach, is to enable citizens of Aspley with internet access to share their broadband with the wider Aspley community. For this we will be aiming to find 50 broadband sharers who are willing to help get 50 other Aspley citizens online. Once we have found our 50 sharers and our 50 new internet citizens, we hope to run a system to provide them with access for 3 months. We will then analyse the results, with the view to running similar project elsewhere (we have begin work with the University of Aberdeen towards a rural PAWS trial)

So far, this has already raised a range of interesting research questions such as:

- Is broadband sharing is most cost effective method for local councils to get citizens online ?

- What level of internet access is most useful (but still feasible) to new internet citizens ? i.e. what bandwidth do citizens expect and what internet services will they use PAWS for?

- How many sharers are required to get new citizens online ? for this trial we have assumed one sharer per citizen, but were we right to assume this?

- Are people willing to share their bandwidth and what (if any) legal issues does this introduce ?

The question that I am personally most interested in and will talk about today is: How can we build a system which allows individuals to share their broadband in a secure but easy to use manner that ensures that the shares internet experience is not notably affected ?

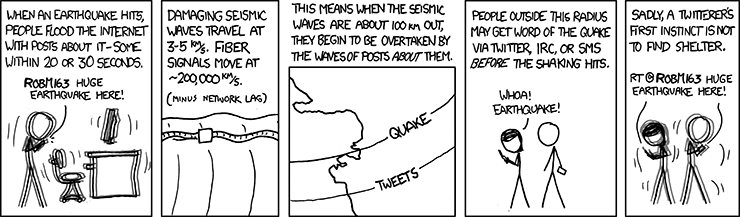

Broadband Sharing is nothing new …

Broadband sharing is nothing new, there are quite a few examples of similar projects, they are commonly known as “Wireless Community Networks”. Wireless Community Networks are were communities of individuals group together to form a co-op where individuals opt to share their broadband over WiFi and in turn they can use the WiFI of the other members of the community.

FON is the worlds most popular Wireless Community Network, with over 8 million members of the community. This demonstrates that individuals are willing to share their broadband, provided its sufficiently incentivised. FON demonstrates that its possible to build a system which allows individuals to share their broadband in a secure but easy to use manner that ensures that the shares internet experience is not notably affected. Job done ![]()

Well not quite… FON allows individuals with internet access to extend their access. PAWS aims to get individuals without internet access online, but these individuals (who make up 1/3 of the people in Aspley) cannot be part of a community like FON. FON does offer some limited access to individuals who don’t have a broadband connection but at a high cost (£6 for 90 mins at the BT FON Spot). PAWS aims to build on the ideas behind Wireless Community Networks like FON, but with the aim of providing internet access to essential public services to all.

The PAWS Model

PAWS aims to work with local partners such as local councils, community groups and charities to make access online available to all. Now for technical stuff. To meets our aims, we need to build a system with following properties:

- Ease of Use: Sharers need to have a simple set-up so that setup does not dis-incentive individuals from becoming sharers and the network need to be easy-to-use for citizens too, as this may be there first ever time online

- Priority: Sharers internet traffic must always be given priority over that of a PAWS citizen, to ensure that a sharers internet access is not notably affected by the sharing their broadband with the PAWS citizens

- Confidentiality, Integrity & Availability: Providing confidentiality means ensuring that PAWS citizens and sharer cannot see each other traffic. Providing integrity means ensuring there nobody can intercept a citizens traffic (i.e. by using a rogue WiFi hotspot). Providing availability means giving citizens reliable internet access in the face of hardware failures

- Authentication, Authorization & Accounting: Like an Internet Service Provider (ISP), we will be responsible for ensuring that PAWS citizens use the network responsibility so we need to have a record of who is using the network

- Scalability: Though this initial trial involves 50 sharers and 50 citizens in Aspley, we want to consider how this can scale up nationally with many linked PAWS communities up and down the country.

Ease of Use

Firstly let’s consider the setup and install process for PAWS sharers. Most of the home routers that households in the UK use are provided by their ISPs. Often these are simply plugged in to the internet and left on the default settings. It wouldn’t be feasible for us to replace households home routers nor is it scalable for us to re-configure everyone’s home router. We could try to share WiFi in software, using a program such as Connectify to turn a sharers PC or laptop into a WiFi hotspot. This would require the sharer to leave their computer on, require the supported WiFi card and require us to support a range of PC platforms.

Therefore we will introduce new hardware, that will connect to the sharer network and enable broadband sharing in a secure and fair manner. Connecting using an ethernet cable, provides the best bandwidth and most reliable connection between the new hardware for sharing with PAWS and the sharer home network. For the hardware, we have chosen to use a router, called NETGEAR N600, WNDR3800 for its compatibility with software that we would like to run.

Routers, like this one, run special software called firmware. This is similar to how computer run an operating system like Windows 7 or MacOS. The firmware that will be running on the PAWS router is called OpenWRT.

This PAWS router will advertise the name “PAWS”, citizens can then use the internet by connecting to the WiFI network called “PAWS” in some areas of Aspley in Nottingham.

Priority

In order for us to make a PAWS sharers spare bandwidth available to the PAWS citizens, we need to measure the bandwidth available and use this information to decide how much bandwidth to make available to PAWS citizens. To measure the bandwidth, we run regular tests from the PAWS router. You can run a test right now to see how much bandwidth you get using a website such as www.speedtest.net orwww.broadbandspeedchecker.co.uk. For PAWS, we will be measuring the bandwidth using a tools called “BISMark”, made by a team at Georgia Institute of Technology.

In the 3 month Aspley deployment we will spend the first month, measuring the bandwidth available and the next two month making this spare bandwidth available to the citizens of Aspley. We will ensure that PAWS citizens only use this spare bandwidth by throttling the bandwidth at the PAWS router in the sharers homes.

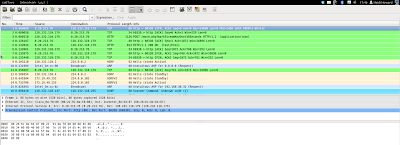

Authentication

We need to have a record of who has connected to the PAWS network and what they have used the network for. Therefore, we need all PAWS citizens to login when they connect to the PAWS network. In the same way that you need to login to WiFi at a cafe, train station or airport. For this we will use a software called RADIUS, which is also commonly used for managing the log in’s at public WiFi hotspots. RADIUS is used to for logging into many services online and therefore we could link PAWS to local services to allow PAWS citizen to be automatically registered and use the same password as they would for the local service. This will not be implemented in the trial deployment in Aspley

Accountability & Authorization

When you connect to the internet, you are known by number referred to as an “IP address”. You can find out the IP address that you are using to visit the internet now by visiting whatismyipaddress.com. IP addresses are allocated to households by their ISP and they can be used by the ISP to identify the source of any malicious activity online. PAWS citizens need to have a different IP address when they access the internet via a PAWS router than the sharers normal internet traffic. For this we use a Virtual Private Network (VPN), which sends all of the PAWS citizens internet traffic to another computer on the internet before sending it to the internet. This means that a all PAWS citizen will have a IP address that is different to that of the PAWS sharers. We can combine the VPN with RADIUS authentication so that all PAWS citizen log into the VPN with there own username and password.

Connecting to the PAWS network will therefore involve two steps: connecting to the WiFi network called “PAWS” and then login into the PAWS VPN.

A firewall, is a set of rules that govern what traffic can pass through a device, and we will run a firewall on the PAWS router to ensure that the only way to access the internet via the PAWS network is using the PAWS VPN

Confidentiality & Integrity

We need to ensure that PAWS sharers and citizens cannot see or change each others traffic. Wireless traffic is often poorly secured, this is why you shouldn’t check your bank account when using WiFi in a cafe. The use of VPN (as just described) means that we can easily encrypt PAWS citizens traffic from the citizen device like a laptop or mobile phone to a secure computer on the internet. This secure computer that all the PAWS internet traffic goes via is known as VPN server and hosted by local company ENMET.

Scalability

Though Aspley is the first ever trial of PAWS, we hope that there will be many more to follow. We plan to enable PAWS citizens to login to PAWS within any of these deployments without needing to register

Limitations

Our system isn’t perfect and there are some open issues to address. Firstly, not all devices are VPN compatible and on some devices which are, set up can be difficult. Fortunately in the Aspley trial we will have the PAWS team on hand to help PAWS citizens, though this isn’t scalable across the country. There are different type of VPN, offering different trade offs, unfortunately there is one type of VPN that is the all round best solution. In our own initial tests, we have found that some home routers, that have provided by UK ISPs, block VPN traffic by default. This is easy to fix by logging into the home router and changing a few setting but it does require the sharer to know there router’s username and password. The PAW’s routers currently cost about £110 each, which is fairly expensive. All PAWS traffic in the Aspley deployment is routed via a single VPN server, meaning that there is a single point of failure. In the Aspley deployment, we will welcome all PAWS sharers to becomes PAWS citizens but it would be nice if we could have greater incentives to share.

Ideas for Future Work

It would be nice to extend the incentives for members of the community to become PAWS citizens, potentially by introducing a two tier system where PAWS citizens who are also PAWS sharer are able to get more bandwidth on the PAWS network. Another potential improvement is to allow PAWS citizens who are also PAWS sharer, to use the PAWS router as a VPN server for there traffic. In the future, I would like explore the potential for replacing VPN with other security connectivity method such as WPA Enterprise. The PAWS citizens user experience could be streamlined by creating a mobile application to automatically connect to VPN when the user connects to a PAWS router, this application could then be extend to allow users to view a coverage map and add feedback about quality of access available at different points.

Thanks for Listening